Researchers have found that the actions of a "driver" in an autonomous vehicle are judged more leniently than those of a driver in a regular vehicle

Decision making is a cognitive process in which people can choose from several options. This process can be rational (based on examining all possibilities and using judgment) or irrational (based on instincts and intuition). Research in the field examines how people perceive and process the information presented to them, choose between the options and make a decision.

Prof. Simon Moran from the Department of Management and Dr. Amos Shor from the Department of Business Administration and the Center for Decision Making and Economic Psychology at Ben Gurion University are researchers in the field of judgment and decision making. Their research focuses on topics such as negotiation, cooperation, competitiveness, moral behavior, consumerism, deception, and interpersonal interactions.

In their latest study, which won a grant from the National Science Foundation, the researchers wanted to examine whether and how making a decision in an automated environment affects the way people judge it. More precisely, they asked whether people judge a driver of an autonomous vehicle differently from a driver of a regular vehicle when each gets into a car accident through no fault of their own. Today there are vehicles equipped with an autonomous driving system, but they still require a driver (similar to robotic medical equipment that still requires human intervention and supervision). That is, their use requires cooperation and interaction between human and machine.

"As researchers in the field of judgment and decision-making, automation interests us because it leaves a default. If the person does not decide and does not act - the machine will do it in his place. And the judgment of the onlooker will likely be influenced by the person's attitude to the default - whether he accepted it or deviated from it," explains Professor Moran.

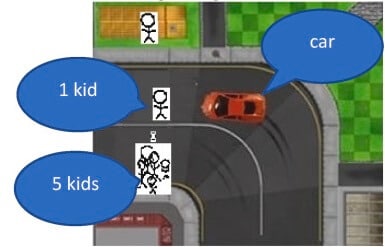

As part of the study, the researchers examined approximately 1,800 subjects from the general population (recruited through Amazon's research platforms). They presented them with scenarios, in text or as a diagram, describing an unavoidable accident: half of the subjects saw a scenario in which the driver drives an autonomous vehicle, and half a scenario in which the driver drives a regular vehicle. In one of the scenarios, the driver was driving and five children were crossing at the crosswalk in front of him. Since he could no longer stop in time due to a curve, his options were to continue straight and hit all the children (the default) or swerve and hit one child (a utilitarian action). The subjects were told which option the driver chose (continue straight or swerve) and were then asked, among other things, how moral his behavior was, how good his intentions were, and what punishment he should receive for hitting a pedestrian or pedestrians.

It was found that the driver of the regular car was judged by the subjects according to the damage he caused. The greater the damage, the less moral the driver was considered, and vice versa. "It didn't matter to the subjects whether the driver continued straight or turned, but how many children were run over. Minimum damage, and not the driver's actions, was the main criterion by which he was judged," explains Dr. Shor.

In contrast, the driver of the autonomous vehicle was judged by his action. If he swerved, no matter how many children he hit, he was seen as more moral. "In this case, the subjects expected the driver to act and not let the vehicle decide on its own. That is, he received moral credit from the very fact that he acted and exercised judgment, even if the action ended with a less favorable result, and thus he was also perceived as having good intentions," adds Dr. Shor.

The subjects also stated that the driver of the autonomous vehicle deserves the lightest punishment if he turned the steering wheel (that is, he acted) and a harsher punishment if he did not turn the wheel and let the vehicle continue straight (that is, he decided nothing and did not act). The driver of the regular car, as mentioned, was judged according to the level of damage (the number of victims). So, no matter how he acted, the harshest punishment went to the driver who hit the most children, and vice versa.

These findings suggest that when unavoidable damage is caused in an automated environment, a person's active behavior is judged with greater leniency. According to Prof. Moran, "in the presence of automation, in particular during an unavoidable injury or accident that presents us with a moral dilemma (who to harm and who to save), moral judgment is based on intention and on action versus inaction. Without automation, moral judgment is based on the end result. The expectation from those who are in an automated environment is to act, exercise moral judgment and take responsibility. It seems that taking action in this environment is seen as having a good intention, as an attempt to save the situation, and is therefore judged less harshly even if it leads to less favorable results. Conversely, not taking action in this environment and leaving the moral decision in the hands of the machine is seen as an escape from responsibility and is judged harshly. In the regular environment, a person is not judged according to his actions, but according to the result (the extent of the actual damage)." According to Dr. Shor, "these conclusions can contribute to the establishment of a policy according to which autonomous systems will be programmed so that the human operator can intervene in their operation more easily."

Indeed, in the follow-up project, the researchers plan to examine the programming of the autonomous systems. "The questions that will be asked are how much authority should be given to the programmers in designing an autonomous vehicle, which rule should guide them - protecting the driver or reducing damage (two possible rules in the production of autonomous vehicles), and how they will feel following harmful programming," Prof. Moran concludes.

Life itself:

Prof. Simon Moran, 57, married with two children (ages 27 and 25, graduates of medical / management studies), lives in the community of Mitar. She immigrated from South Africa at the age of 10. During her academic career she was a guest at the Harvard Business School and the Wharton School of Business at the University of Pennsylvania.

Dr. Amos Shor, 45, married with four daughters (ages 15, 13, 9 and 6), lives in Jerusalem. He will soon begin a sabbatical year at the University of California at San Diego (UCSD) in the USA.
